The History of Computers: From Early Calculations to Intelligent Machines

Computers are so deeply woven into our lives today that it’s hard to imagine a world without them. From smartphones and laptops to cloud servers and AI systems, computers quietly power almost everything we do. But this journey didn’t start with modern machines — it began thousands of years ago with simple ideas about counting, logic, and automation.

Table of Contents

- Early Foundations: Before Electronic Computers

- First Generation Computers: Vacuum Tubes (1940s–1950s)

- Second Generation Computers: Transistors (1950s–1960s)

- Third Generation Computers: Integrated Circuits (1960s–1970s)

- Fourth Generation Computers: Microprocessors & Personal Computers (1970s–1990s)

- The Internet Age: Connectivity & Information Explosion (1990s–2000s)

- Modern Era: Cloud, Mobile, and Artificial Intelligence (2010s–Present)

- Conclusion: From Tools to Thinking Systems

1. Early Foundations: Before Electronic Computers

Long before electricity, humans still had problems to solve — counting money, tracking time, measuring land. The abacus was one of the earliest tools created for this purpose, and it worked so well that it’s still used in some places today.

In the 1600s, inventors like Blaise Pascal started building mechanical calculators that could add and subtract automatically. These devices weren't fast or flexible, but they proved one important idea: machines could help humans think.

Later, in the 1830s, Charles Babbage designed the Analytical Engine, a machine that could follow instructions, not just calculate numbers. Ada Lovelace realized it could manipulate symbols as well as numbers, and her 1843 notes on the machine include what is often regarded as the first published computer program. Even though the machine was never completed, the idea changed history.

2. First Generation Computers: Vacuum Tubes (1940s–1950s)

The first real electronic computers appeared in the 1940s, driven mostly by wartime and scientific needs. Machines like ENIAC filled entire rooms and used about 18,000 vacuum tubes.

They were powerful for their time, but also fragile. One faulty tube could crash the whole system. Programming them was slow and painful, but they proved that electronic speed could outperform human calculation.

This was the moment computers stopped being an idea and became a reality.

3. Second Generation Computers: Transistors (1950s–1960s)

Transistors replaced vacuum tubes and made computers more practical. They used less power, produced less heat, and failed far less often.

This era also made computers easier to program. Languages like FORTRAN allowed people to write instructions in something closer to mathematical notation than to raw machine code. Businesses began using computers for real-world tasks like payroll and inventory.

Computers were still expensive, but they were finally useful outside research labs.

4. Third Generation Computers: Integrated Circuits (1960s–1970s)

Integrated circuits placed many transistors onto a single chip. This dramatically reduced size and cost while boosting performance.

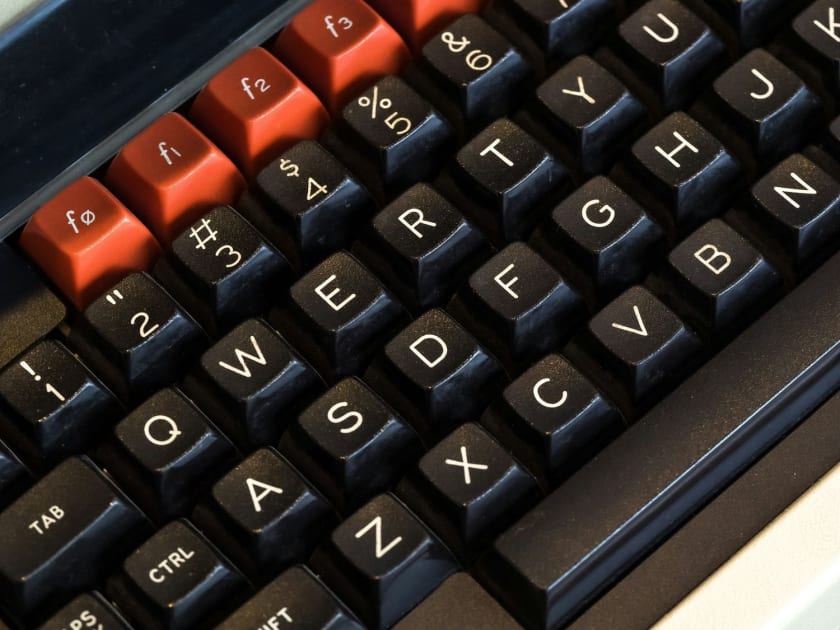

For the first time, computers could run several programs at once and respond to users in real time. Screens and keyboards began to replace punch cards. Computers became tools people could actually sit down and use.

This stage quietly set the foundation for everything we use today.

5. Fourth Generation Computers: Microprocessors & Personal Computers (1970s–1990s)

The microprocessor changed everything by fitting an entire CPU, the computer's "brain," onto one chip. This made personal computers possible for individuals and small businesses.

Suddenly, computers weren’t just for companies — they were in homes, schools, and offices. Software like word processors and spreadsheets changed how people worked. Graphical interfaces made computers usable even for non-technical users.

This was the moment computers became part of daily life.

6. The Internet Age: Connectivity & Information Explosion (1990s–2000s)

When computers connected to each other, everything changed. Email took over much of the role of letters, websites became a first stop for finding information, and online businesses reshaped the economy.

The internet turned computers into communication machines. Information became global, instant, and searchable. New careers and industries were born almost overnight.

Computers were no longer optional: they had become central to education, entertainment, and commerce.

7. Modern Era: Cloud, Mobile, and Artificial Intelligence (2010s–Present)

Today’s computing happens everywhere — from smartphones to cloud data centers. Cloud computing enables scalable services, while mobile devices put computing power in every pocket.

Artificial intelligence has shifted computers from rule-following machines to learning systems. Modern computers can analyze data, understand language, and assist decision-making.

Technologies like AI agents, edge computing, and automation are shaping the future of human–computer interaction.

Conclusion: From Tools to Thinking Systems

The history of computers isn’t just about machines — it’s about humans trying to solve problems better. Every generation of computers reflects human creativity, limitations, and ambition.

Today, computers are no longer just tools: they are partners in creativity, productivity, and problem-solving. Understanding this history helps us appreciate what we have today and reminds us that technology is shaped by choices. As computers grow smarter, the responsibility to use them wisely grows too.

The story isn’t finished — and we’re all part of what comes next.